Abstract

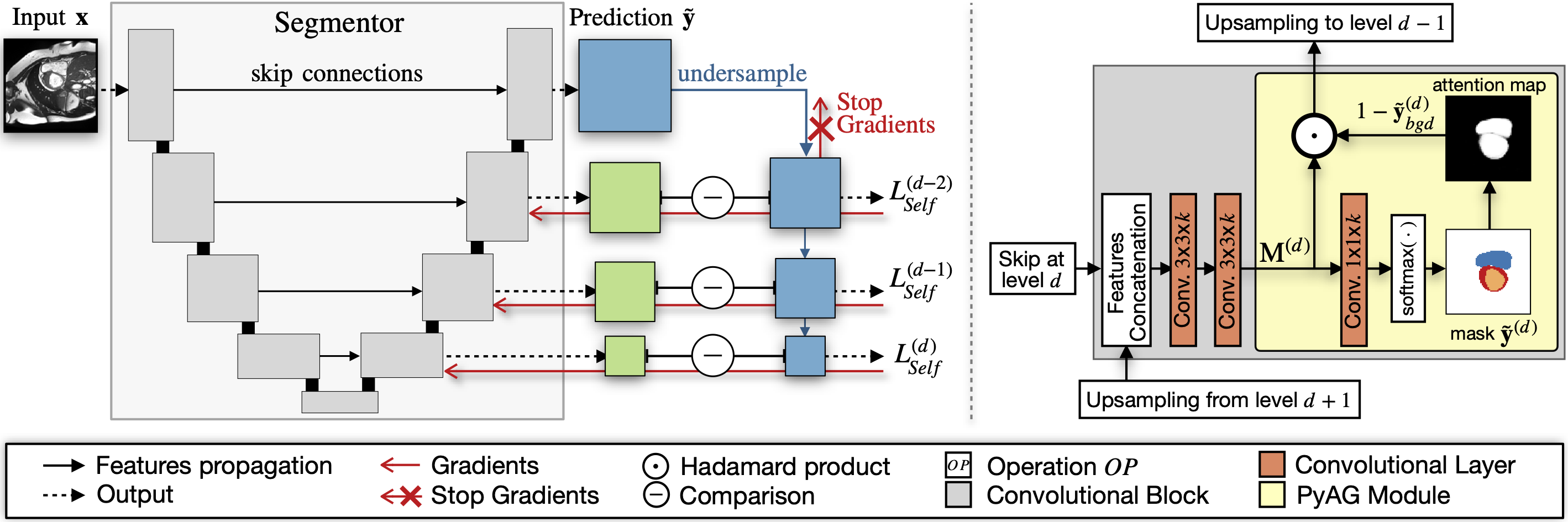

Collecting large-scale medical datasets with fine-grained annotations is time-consuming and requires experts. For this reason, weakly supervised learning aims at optimising machine learning models using weaker forms of annotations, such as scribbles, which are easier and faster to collect. Unfortunately, training with weak labels is challenging and needs regularisation. Herein, we introduce a novel self-supervised multi-scale consistency loss, which, coupled with an attention mechanism, encourages the segmentor to learn multi-scale relationships between objects and improves performance. We show state-of-the-art performance on several medical and non-medical datasets.

Keywords

Self-supervised Learning | Segmentation | Shape Prior

Cite us:

    @incollection{valvano2021self,
      title={Self-supervised Multi-scale Consistency for Weakly Supervised Segmentation Learning},
      author={Valvano, Gabriele and Leo, Andrea and Tsaftaris, Sotirios A},
      booktitle={Domain Adaptation and Representation Transfer, and Affordable Healthcare and AI for Resource Diverse Global Health},
      pages={14--24},
      year={2021},
      publisher={Springer}
    }

Don’t miss any updates!

You can either: